Horror, anger, disbelief and resignation. Then, just constant anger.

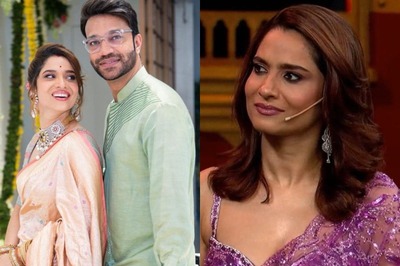

That is how Hana Mohsin Khan, a commercial pilot, felt when she learnt in July 2021 that she had been “auctioned” on Sulli Deals, an app hosted on GitHub, the US-based software hosting and collaboration platform. “I used to be a really happy person before. All this happened because I am Muslim and a woman with an opinion,” she told Moneycontrol.

After six months of agony, just when she was ready to put it all behind her and start afresh in 2022, another app, Bulli Bai, surfaced, and over 100 women were auctioned on it on January 1. “I was not part of Bulli Bai, but it brings up memories and the sense that this will never stop. There is no progress, no hope,” she said.

What really irks her, along with several other women and digital rights activists, is the total lack of accountability from GitHub, where the two apps were hosted. “Platforms like GitHub need to take responsibility. We need more security and checks in place and it is high time something is done about it,” said Khan.

The recent incident has put the spotlight on the moderation, or lack thereof, on Microsoft-owned GitHub, and the need for transparency in how these issues are addressed.

GitHub

Founded in the US in 2008, GitHub has been dubbed a social network for developers. Millions of developers and hundreds of organisations use the platform to host software projects.

There are close to 73 million developers on GitHub, with 16 million joining in 2021 alone. The platform has about 5.8 million users in India, one of its fastest-growing markets.

Every day, hundreds of developers upload applications, edit them and collaborate with peers across the world. As a Bengaluru-based security researcher explains, unlike on platforms built around pictures or text, most of the content on GitHub is code written in Java and other programming languages. The source code behind many of these applications is public, and that leaves the platform exposed to moderation problems, not just in India but globally.

For instance, Sami, a web developer, explained on Twitter how the Bulli Bai app used the same source code as Sulli Deals. “Whoever created the GitHub page ‘SulliDeals’ is also the same person who created the github page ‘BulliBai’ now. It seems they have rephrased the texts on the page, but it is the same code, same function with the name ‘sulli’ being used in code.”

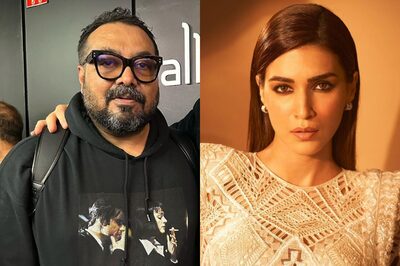

Raj Pagariya, a technology lawyer and partner (client relations) at The Cyber Blog India, says the larger issue is how little effort the platform makes to ensure that incidents violating its community guidelines are not repeated, as happened with Sulli Deals and Bulli Bai.

“When it happens once, it is understandable. But when it happens twice, there is an issue. The fact that the platform did not make enough of an effort to ensure there is no repeat is concerning,” he said.

Not once but twice

In July 2021, close to 80 women were auctioned on Sulli Deals, hosted on GitHub.

In the following days, FIRs were filed in Uttar Pradesh and New Delhi. But there has been no progress in the investigation so far. Khan, who had filed the complaint in Noida, said that even after repeated follow-ups, no action has been taken and many of the victims have given up.

Getting cooperation from GitHub in criminal proceedings is also a challenge.

Anushka Jain, Associate Counsel, Surveillance and Transparency, Internet Freedom Foundation, explained that any information needed from GitHub for criminal proceedings has to be sought through the mutual legal assistance treaty (MLAT) between India and the US. An MLAT is an agreement between two or more countries to gather and exchange information in order to enforce public or criminal laws.

Just when things were cooling down, Bulli Bai, another app hosted on GitHub, surfaced on January 1, “auctioning” pictures of 100 women. The app was taken down immediately.

However, unlike last time, the backlash has been huge. An FIR filed in Mumbai saw quick action from the police as politicians stepped in. So far, four arrests have been made, all of them students: an engineering student from Bengaluru, two people from Uttarakhand and a man from Assam.

While the quick action by law enforcement has been heartening, many pointed out that it does not solve the core issue: how GitHub deals with such content.

A question of moderation

“If you look at platforms like GitHub, they are fairly large in size and for any large platforms, content moderation is a struggle,” said Pagariya.

“But even if you are large, one would expect that the platform would do something to prevent Sulli Deals from happening a second time. But that is not what happened; only six months later, it came up again,” he said.

Padmini Ray Murray, founder, Design Beku, a tech and design collective, told Moneycontrol that since the two apps used similar code, the platform should have had checks in place to stop the second one from being created. “But they have not done anything, or if they did, we don’t know what it is. There is a need for more transparency,” she said.

Akancha S Srivastava, Founder, Akancha against Harassment, which works with law enforcement on cyberbullying, said: “For the platforms, just blocking them is not enough. They have responsibility and should take preventive action. Also the response from GitHub needs to be better.”

According to experts, it is high time platforms adopted better moderation tools and became more transparent about how they address these concerns.

Content moderation

Content moderation is a slippery slope in general. But unlike social media platforms, which must comply with Indian rules and regulations, GitHub falls in a grey area.

Under the new IT rules, every significant social media intermediary must appoint a grievance officer, a compliance officer and a nodal officer in India. From Facebook to homegrown social media apps, these platforms now have local officers who cooperate with the government and whom users can approach with grievances.

But GitHub does not have similar officers posted in India and victims have to take the legal route to get information from the platform.

Recently, law student Amar Banka sent GitHub a legal notice on the issue. He posted the response he received from GitHub on Twitter. “Foreign enforcement officials wishing to request information from GitHub should contact the United States Department of Justice Criminal Division’s Office of International affairs. GitHub will promptly respond to requests that are issued via U.S. court by way of a mutual legal assistance treaty (MLAT) or letter rogatory.”

A digital rights activist, who did not want to be named, said the MLAT process is merely for show and does not work in most cases.

Moneycontrol sent detailed queries to GitHub on moderation, compliance with local laws, its grievance and compliance officers in India, and the level of cooperation the platform is extending to the government.

GitHub did not respond to the specific queries but shared a statement: “GitHub has longstanding policies against content and conduct involving harassment, discrimination, and inciting violence. We suspended a user account following the investigation of reports of such activity, all of which violate our policies.”

To be sure, GitHub has laid down policies restricting unlawful, defamatory and abusive content targeting an individual or group, as was the case with Sulli Deals and Bulli Bai. The platform takes content down when it is reported and violates its community guidelines.

That raises the question of whether GitHub should actively moderate the code it hosts, given that this isn’t the first time the company has run into trouble over moderation.

History of moderation issues

In 2014, India blocked 32 websites, including GitHub, for hosting content related to ISIS. Globally, the platform has come under scrutiny for hosting code that lets people create deepfakes, which can be used to make non-consensual pornographic videos, a violation of the platform’s policies. GitHub has also been censored in other countries, including China and Russia.

Victims have asked for better moderation, but this raises the question of how far a platform should go in moderating content.

The security researcher cited earlier, who works for a Bengaluru-based unicorn, pointed out that actively moderating code uploaded by millions of developers is a challenge because most of what the platform hosts is code rather than pictures or text. “So, to moderate it, one has to go behind each and every project to ascertain if it violates policies or not. When there are hundreds of thousands of codes to go through, moderating each and every one is not feasible,” the researcher said.
