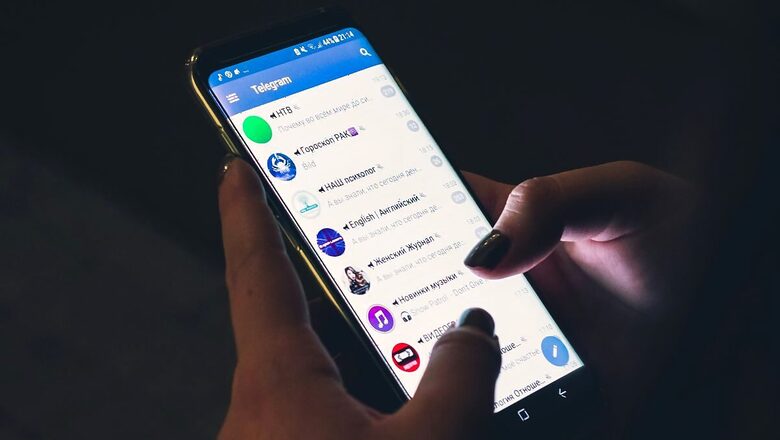

LONDON: Telegram boss Pavel Durov said on Friday that the messaging app would tackle criticism of its content moderation and remove some features that had been abused for illegal activity.

Durov, who was last week placed under formal investigation in France in connection with the use of Telegram for crimes including fraud, money laundering and sharing images of child sex abuse, announced the move in a message to his 12.2 million subscribers on the platform.

"While 99.999% of Telegram users have nothing to do with crime, the 0.001% involved in illicit activities create a bad image for the entire platform, putting the interests of our almost billion users at risk," the Russian-born tech entrepreneur wrote.

"That’s why this year we are committed to turn moderation on Telegram from an area of criticism into one of praise."

Durov did not spell out in detail how Telegram would achieve that. But he said it had already disabled new media uploads to a standalone blogging tool "which seems to have been misused by anonymous actors".

It had also removed the little-used People Nearby feature, which "had issues with bots and scammers", and would instead showcase legitimate, verified businesses in the vicinity of users.

The changes are the first he has announced since being arrested last month in France and questioned for four days before being placed under formal investigation and released on bail.

The case has reverberated through the global tech industry, raising questions about the limits of free speech online, the policing of social media platforms and whether their owners are legally responsible for criminal behaviour by users.

Durov's lawyer has said it was absurd to investigate the Telegram boss in connection with crimes committed by other people on the app.

Katie Harbath, a former public policy director at Meta who now advises companies on technology issues, said: "It's good that Durov is starting to take content moderation seriously but, just like Elon (Musk) and other tech CEOs who run speech platforms have found, if he thinks this will be as simple as making a few small changes, he's in for a rude awakening."

Telegram has also removed language from its Frequently Asked Questions page saying that it does not process reports about illegal content in private chats because such chats are protected.

Durov did not refer to that change in his message, in which he also said that Telegram had reached 10 million paid subscribers.

In a previous post on Thursday, Durov said Telegram was not perfect. "But the claims in some media that Telegram is some sort of anarchic paradise are absolutely untrue," he wrote. "We take down millions of harmful posts and channels every day."

He said the French investigation was surprising, because authorities there could have contacted Telegram's EU representative, or Durov himself, at any time in order to raise concerns.

"If a country is unhappy with an Internet service, the established practice is to start a legal action against the service itself," he wrote.

(Additional reporting by Sheila Drang; Writing by Mark Trevelyan; Editing by Peter Graff)
